(Note: this page is a work in progress and may change over time to feature new work)

Part of my work on the MUSICA project involves modeling jazz and musical communication through improvisation. There are two aspects to this: (1) creating generative algorithms for jazz and (2) modeling communication of motifs and other musical information between musicians in interactive settings. The first aspect primarily concerns the computer generating convincing music in real time without interaction from a human, and I have primarily used Haskell to explore it. For the second aspect, modeling musical communication, I have used Python to explore the scenario of Trading Fours, where two musicians (one of them a computer in this case) exchange 4-measure solos. My workflow often involves creating the generative algorithms in Haskell and then porting them to Python for use in human-computer interactive scenarios.

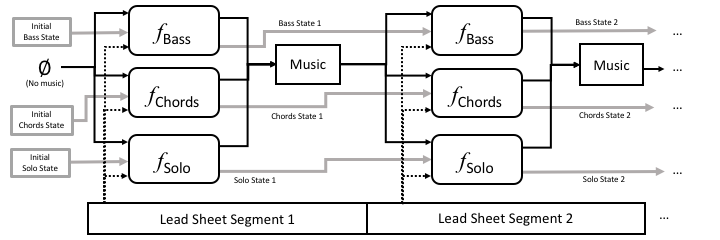

Both the Haskell and Python implementations I work with assume the following flow of information in a typical 3-part jazz group:

where a lead sheet segment is a short fragment of music with no contextual changes. A typical jazz lead sheet can be coarsely broken into segments at the boundaries of chord changes, but it may also be segmented more finely around metrical features.
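As a rough illustration of the coarse segmentation described above, the following sketch splits a chord chart into segments at chord-change boundaries. It is not taken from Jazzkell or the Python implementation: the `Segment` type, the `segment_lead_sheet` function, and the (chord, beats) input format are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    """A stretch of the lead sheet with one unchanging context (hypothetical type)."""
    chord: str       # chord symbol in effect, e.g. "Dm7"
    start: float     # onset in beats from the start of the form
    duration: float  # length in beats


def segment_lead_sheet(changes: List[Tuple[str, float]]) -> List[Segment]:
    """Split a list of (chord, beats) pairs into segments at chord changes.

    Adjacent entries with the same chord are merged, so every boundary in the
    result is a real change of harmonic context.
    """
    segments: List[Segment] = []
    onset = 0.0
    for chord, beats in changes:
        if segments and segments[-1].chord == chord:
            # Same chord as before: extend the previous segment.
            last = segments[-1]
            segments[-1] = Segment(last.chord, last.start, last.duration + beats)
        else:
            segments.append(Segment(chord, onset, beats))
        onset += beats
    return segments


# A ii-V-I in C major, four beats per measure, with the tonic held for two measures.
ii_v_i = [("Dm7", 4.0), ("G7", 4.0), ("Cmaj7", 4.0), ("Cmaj7", 4.0)]
segments = segment_lead_sheet(ii_v_i)
```

Here the two Cmaj7 measures collapse into a single 8-beat segment. A finer segmentation around metrical features would need additional boundary rules that this sketch does not attempt.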

For more information on this model, see the following preprint on ResearchGate and its associated PowerPoint slides:

A Functional Model of Jazz Improvisation

Donya Quick and Kelland Thomas

2019 Workshop on Functional Art, Music, Modeling, and Design at ICFP.

Slides (pptx with audio) | Slides (pdf)

Haskell Implementation

The Haskell implementation is available as an installable library called Jazzkell.

GitHub: https://github.com/donya/Jazzkell

Hackage: http://hackage.haskell.org/package/Jazzkell

If you have the Haskell Platform, you can install the library from Hackage with the “cabal install Jazzkell” command. The package depends on Euterpea, which will be installed automatically. To see how to use the framework, take a look at the various .lhs (literate Haskell) files in the examples folder.

There is also an online, interactive demo using these models. It is available on the MUSICA Project Demos Page.

Python Implementation

While the Haskell implementation is purely focused on computer-generated music, the Python implementation is intended for human-computer improvisation. The human and computer soloists can trade short melodies over a lead sheet while accompanied by multiple other computer musicians. We have looked at three styles with this framework: slow jazz with a walking bass, bossa nova, and bebop with a walking bass. The computer listens to the human’s solos, analyzes them, and attempts to incorporate features from them into its own responses.
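To give a sense of what incorporating a feature from the human's solo could look like, here is a minimal sketch that copies the interval pattern of a phrase and restates it from a new starting pitch. This is only one simple possibility and is not the method used in the MUSICA implementation; the function names, the MIDI note-number representation, and the default pitch range are assumptions for illustration.

```python
from typing import List


def intervals(pitches: List[int]) -> List[int]:
    """Return successive intervals in semitones for a list of MIDI note numbers."""
    return [b - a for a, b in zip(pitches, pitches[1:])]


def respond_with_motif(human_pitches: List[int], start_pitch: int,
                       low: int = 48, high: int = 84) -> List[int]:
    """Rebuild the human's interval pattern from a new starting pitch.

    Notes that would leave the [low, high] range are moved by octaves so the
    response stays in register. Assumes the range spans at least one octave.
    """
    response = [start_pitch]
    for step in intervals(human_pitches):
        nxt = response[-1] + step
        while nxt > high:
            nxt -= 12
        while nxt < low:
            nxt += 12
        response.append(nxt)
    return response


human_solo = [60, 62, 64, 67, 65]           # C D E G F
reply = respond_with_motif(human_solo, 67)  # start the answer on G
```

A real system would also have to account for rhythm and for the chord in effect in each lead sheet segment, which this sketch ignores.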

The following video shows the Python implementation in action with bossa nova:

The Python implementation is currently private to the MUSICA project and therefore is not yet publicly available.

The Algo-Jazz Series

As part of my work on generative jazz algorithms, I have produced (and am still producing) a series of compositions using my algorithms. This algorithmic jazz, or Algo-Jazz Series, has pieces with varying degrees of algorithmic content. You can hear the entire series and read details about each piece on my Algo-Jazz Series page. Hypnotize, a recent addition to the series, is an example of output from a single, contiguous run of some of my models. The code for producing Hypnotize is included in the examples folder of the Jazzkell library.

(Algorithmic visuals for Hypnotize were created with Processing)

The music possible with the framework can also get more complex. Here is a recent example in bossa nova style.

Modeling Interaction in Other Styles (and in Other Settings)

While my research on these models has been jazz-centric to date, I have also been exploring application of the same principles to other styles. The following piece is an example of the application of Jazzkell’s principles to a non-jazz style and uses two analog synthesizers.

HAILO

HAILO is an interactive Python system built in collaboration with Mario Diaz de Leon in the spring of 2019. It was inspired by the metrically free-form phrases of Gregorian chant and the works of Hildegard von Bingen, and it uses the same high-level generative model presented earlier on this page, treating the parts as functions. However, it differs from my jazz-based implementations in its assumptions of context. Unlike the jazz model, HAILO does not assume a shared harmonic context. Instead, harmonic context is inferred purely from the human musician and can change over time. It also attempts to match metrical patterns found in the user’s input, not just patterns in the pitch domain. User input can come from a standard MIDI controller or from other instruments using audio-to-MIDI conversion. HAILO’s implementation is still evolving, and it will gain more features over time. HAILO was performed at Vault Allure in July 2019 and will also be performed at Electronic Music Midwest later this year.
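One simple way to infer a harmonic context that changes over time is to track the most frequent pitch classes in a sliding window of the human's recent notes. The sketch below illustrates that idea only; it is not HAILO's actual algorithm, and the `ContextTracker` class, its window size, and its context size are hypothetical choices.

```python
from collections import Counter, deque
from typing import Deque, List


class ContextTracker:
    """Keep a rolling estimate of harmonic context from incoming notes (hypothetical)."""

    def __init__(self, window: int = 16, size: int = 5) -> None:
        # Only the most recent `window` pitch classes are remembered.
        self.recent: Deque[int] = deque(maxlen=window)
        self.size = size  # maximum number of pitch classes in the context

    def hear(self, midi_pitch: int) -> None:
        """Record one note played by the human as a pitch class (0 = C)."""
        self.recent.append(midi_pitch % 12)

    def context(self) -> List[int]:
        """Return the most frequent recent pitch classes in ascending order."""
        counts = Counter(self.recent)
        # Rank by descending count, then by pitch class so ties are deterministic.
        ranked = sorted(counts, key=lambda pc: (-counts[pc], pc))
        return sorted(ranked[: self.size])


tracker = ContextTracker(window=4, size=3)
for pitch in [62, 65, 69, 62]:   # D F A D
    tracker.hear(pitch)
early = tracker.context()

for pitch in [64, 67, 71, 64]:   # E G B E
    tracker.hear(pitch)
late = tracker.context()
```

Because old notes fall out of the window, the estimate moves from the pitch classes of D, F, and A to those of E, G, and B as the player shifts, without any lead sheet being supplied.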

Here are two videos of a prototype of the system. The full version of the piece, as performed by Mario on guitar, has far more diverse sonic textures and more complex interactions between musician and computer.

Jazzy Beach Critters

Recently I worked with Christopher N. Burrows to integrate my functional models of jazz into a game scene. For more information on this project, please see the Jazzy Beach Critters page.